EDIT: I called it! Turns out this was much closer to reality than I thought and a paper came out doing exactly this.

Assaying enzymes is rather laborious and, even though the data quality is much higher, it cannot compete in productivity with other fields of biochemistry and genetics. So I gave some thought to where I believe enzymology will be in the future, and I have come to the conclusion that in vitro assays will for the most part be replaced by in vivo omics methods, but not any time soon, as both proteomics and metabolomics need to come a long way, along with systems biology modelling algorithms.

Uncompetitively laborious

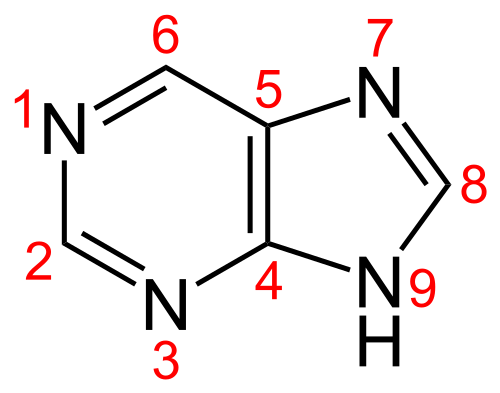

Everyone who has assayed enzymes will tell you that a single table in a paper took years and years of assays. They will tell you horror stories: the enzyme did not express solubly, the substrate took months to make, the detection required a list of coupled enzymes, or the activity was so low that everything had to be meticulously calibrated and assayed individually. Personally, I had to assay Thermotoga maritima MetC at 37°C because for one reaction the indicator decomposed at 50°C, while for another activity the mesophilic coupled enzymes would otherwise denature. All while comparing it to homologues from Wolbachia and Pelagibacter ubique, which had to be monitored by kinetic assay (as they melted if you looked at them) and individually, as the Wolbachia MetC had a turnover of 0.01 s⁻¹ (vestigial activity; cf. my thesis). And I was lucky, as I did not have substrates that were unobtainable, unstable and bore acronyms as names.

The data from enzyme assays is really handy, but the question is how it will fare after 2020.

The D&D 3.5 expression "linear fighter, quadratic wizard", which encapsulates the problem that wizards left fighters behind as levels progressed, seems rather apt, as systems biology and synthetic biology seem to be steaming ahead (quadratically), leaving enzymology behind.

Enzymomics?

Crystallography is another biochemical discipline that requires sweat and blood. But with automation, new technologies and a change of focus (top down), it is keeping up with the omics world. Enzymology, I feel, isn't. There is no such field as enzymonics, only a company that sells enzymes, Google informs me.

A genome-wide high-throughput protein expression and then crystallographic screen may work for crystallography, but it would not work for enzymology as each enzyme has its own substrates and the product detection would be a nightmare.

This leads me to a brief parenthesis: the curious case of Biolog plates in microbiology. They are really handy, as they are a panel of 96-well plates with different substrates and toxins. These phenotype "micro"arrays are terribly underutilised, because each plate inexplicably costs $50-100. If someone made "EC array plates" where each well tested an EC reaction, a similar or worse problem would arise.

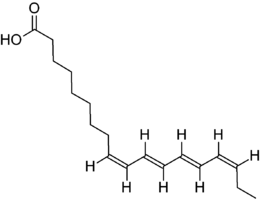

That hardly matters, as a set of EC plates would be impossible to make anyway. To work, each well would need the substrate, which is often unstable, along with a lyophilised thermophilic enzyme evolved to generate a detectable change (e.g. NADH or something better still) for a specific product, in order to do away with complex chains of coupled enzymes that may interfere with the reaction in question. Not to mention that EC numbers are rather frayed around the edges. I think the most emphatic example is that the reduction of acetaldehyde to ethanol was the first reaction described (giving us the word enzyme) and has the number EC 1.1.1.1, while the reduction of butanal to butanol is EC 1.1.1.–, as in no number at present.

Therefore, screening cannot work in the same format as crystallography.

Parallel enzymology

Some enzyme assays are easy, especially for central metabolism. The enzymes are fast, the substrates purchasable and the reaction product detectable. As a result, with the reduction of gene synthesis costs (currently cheaper than buying the host bug from ATCC and way less disappointing than emailing authors), panels of homologues for central enzymes can be tested with ease. There are some papers that are starting to do that and I am sure that more will follow. That is really cool; however, it is the weird enzymes that interest scientists the most.

In silico modelling

Even if it would seem like a straightforward thing, it is currently near impossible to determine in silico the substrate of an enzyme, or the kinetic parameters given an enzyme structure and its substrate. Protein structure predictions are poor at best and in silico docking to find the substrates is not always reliable, although a few papers have found the correct substrate starting from crystal structures of the enzymes. Predicting the kinetic parameters requires computationally very heavy quantum-mechanical molecular dynamics simulations, and the result would be an approximation at best. What is worse is that all these programs, from Autodock to Gaussian, are challenging to use, not because they present cerebral challenges, but because they are simply very buggy. Furthermore, the picture would be only partial.

Deconvoluted in vivo data

Genetic engineering, metabolomics and proteomics might come to the rescue. Currently, metabolomics is more hipster avant-garde than mainstream. The best way to estimate the intracellular concentration of something in the micromolar range is to get the Michaelis constant of the enzyme that uses it (go enzymology!). But it is just a matter of time before one can detect even nanomolar compounds arising from spontaneous degradation or promiscuous reactions: the "dark metabolome", if you really wanted to coin a word for it and write a paper about it.

Also, currently, flux balance analysis can be constrained with omics data, in order of quality: transcriptomics, proteomics and metabolomics data. Even if the latter two datasets were decent, systems biology models would still need to come a long way before one could derive, from a range of conditions, a rough estimate of the kinetic parameters of all the enzymes in the genome. The current models are not flexible or adaptive: one builds a model and the computer finds the best-fitting equation, which requires fancy solvers. Then again, the data is lacking and the models are not as CPU-heavy as phylogeny or MD simulations. Consequently, the current models are poor benchmarks: if perfect proteomics and metabolomics data were available, it would take Matlab milliseconds to find the reaction velocity (and as a consequence the catalytic efficiency) of all the enzymes in the model.

Add a second condition (say a different carbon source or a knockout) and, yes, one could get better guesstimates, but issues would pop up, like negative catalytic efficiencies. The catch is that some enzymes are inhibited, others are impostors in the model, and unannotated enzymes catalysing the same reactions may result in subpar fits. Each enzyme may be inhibited by one or more of over five thousand proteins or small compounds in a variety of fashions, and any enzyme may secretly catalyse the same reaction.
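To put some numbers on that, here is a minimal sketch in Python. It assumes the simplest rate law I can think of, namely substrate well below the Michaelis constant, so that v ≈ (kcat/KM)·[E]·[S]. The function names and all the values are made up for illustration and do not come from any real model or dataset. With one condition the apparent catalytic efficiency is a single division; with two conditions it becomes a line fit, and an unmodelled inhibitor is one way (not necessarily the only one) to end up with a negative "efficiency".

```python
def apparent_efficiency(flux, enzyme, substrate):
    """Apparent kcat/KM (M^-1 s^-1) from one condition, assuming [S] << KM.

    flux is the reaction velocity in M/s; enzyme and substrate are in M.
    """
    return flux / (enzyme * substrate)


def fitted_efficiency(conditions):
    """Least-squares line of flux against [E][S] over several conditions.

    The slope plays the role of kcat/KM. The intercept is a hypothetical
    catch-all for flux carried by anything the model does not know about.
    """
    xs = [e * s for _, e, s in conditions]
    vs = [v for v, _, _ in conditions]
    n = len(conditions)
    mean_x = sum(xs) / n
    mean_v = sum(vs) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxv = sum((x - mean_x) * (v - mean_v) for x, v in zip(xs, vs))
    slope = sxv / sxx
    intercept = mean_v - slope * mean_x
    return slope, intercept


# Invented numbers: (flux in M/s, [E] in M, [S] in M).
glucose = (2e-6, 1e-6, 1e-4)
# On a second carbon source both enzyme and substrate go up, yet the flux
# drops, e.g. because an inhibitor missing from the model accumulates.
acetate = (1e-6, 2e-6, 2e-4)

single = apparent_efficiency(*glucose)
slope, intercept = fitted_efficiency([glucose, acetate])
print(f"single-condition kcat/KM: {single:.3g} M^-1 s^-1")
print(f"two-condition slope:      {slope:.3g} M^-1 s^-1")
```

The single-condition value looks perfectly respectable, while the two-condition slope comes out negative: the arithmetic is trivial, it is the missing biology that breaks the fit.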

The maths would get combinatorially crazy quite quickly, but constraints and weights could be applied, such as previously obtained kinetic data, the similarity of Michaelis constant and substrate concentration, or even extrapolation from known turnover rates for known reactions of that subclass.
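As a toy illustration of what such a weight could look like, here is a sketch that merges an omics-derived estimate with a previously measured value by inverse-variance weighting in log space. This is a generic statistical trick that I am assuming for the example, not the method of any particular systems biology package, and the function name, the numbers and the uncertainties are all hypothetical.

```python
import math


def combine_log_estimates(estimates):
    """Inverse-variance weighted mean of positive values in log10 space.

    estimates is a list of (value, log10_sd) pairs, where log10_sd is the
    assumed uncertainty in orders of magnitude. Values must be positive.
    """
    weights = [1.0 / sd ** 2 for _, sd in estimates]
    logs = [math.log10(value) for value, _ in estimates]
    mean_log = sum(w * l for w, l in zip(weights, logs)) / sum(weights)
    return 10 ** mean_log


# Hypothetical kcat/KM estimates in M^-1 s^-1.
omics_fit = (1e3, 1.0)        # from the model fit, trusted to about 10-fold
in_vitro_prior = (1e5, 0.5)   # from an old assay of a homologue, trusted more

combined = combine_log_estimates([omics_fit, in_vitro_prior])
print(f"combined kcat/KM: {combined:.3g} M^-1 s^-1")
```

The tighter prior pulls the combined value towards the in vitro number, which is the whole point: old-fashioned assay data would act as an anchor for the fit and would not become obsolete.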

Questioning gene annotation would open up a whole new can of worms, as unfortunately genome annotation does not have a standardised "certainty score" (it would be diabolically hard to devise, as annotations travel like Chinese whispers), so every gene would have to be equally likely to be cast in doubt, unless actual empirical data were used. So in essence it would have to be a highly interconnected process, reminiscent of the idealistic vision of systems biology.

Nevertheless, despite the technical challenges, it is possible that with a superb heuristic model-adapting algorithm and near-perfect omics profiles under different conditions, pretty decent kinetic parameters could be obtained for all the enzymes in a cell, along with a list of genes to verify by other means. When such a scenario will become mainstream is anyone's guess; mine is within the next ten to fifteen years.